Picture this: You’re about to present regional numbers to the board. Your report shows a solid increase in sales, but something doesn’t feel quite right. As the figures get pulled up, you notice discrepancies between your regional report and the executive summary. The Regional Manager’s report is showing one number, while the Executive team’s report is showing another. Suddenly, the trust you’ve worked hard to build in your reporting is on the line. What went wrong, and how can it be fixed?

This situation is all too familiar for BI professionals. With reporting tools like Power BI, it’s easy to get caught in a web of misaligned models, conflicting data, and multiple stakeholders. The data can be incredibly powerful, but it’s only as reliable as the underlying systems and logic. When the numbers don’t match, stakeholders stop trusting the reports, and their value is undermined.

Two Reports, Two Different Numbers - Who’s Right?

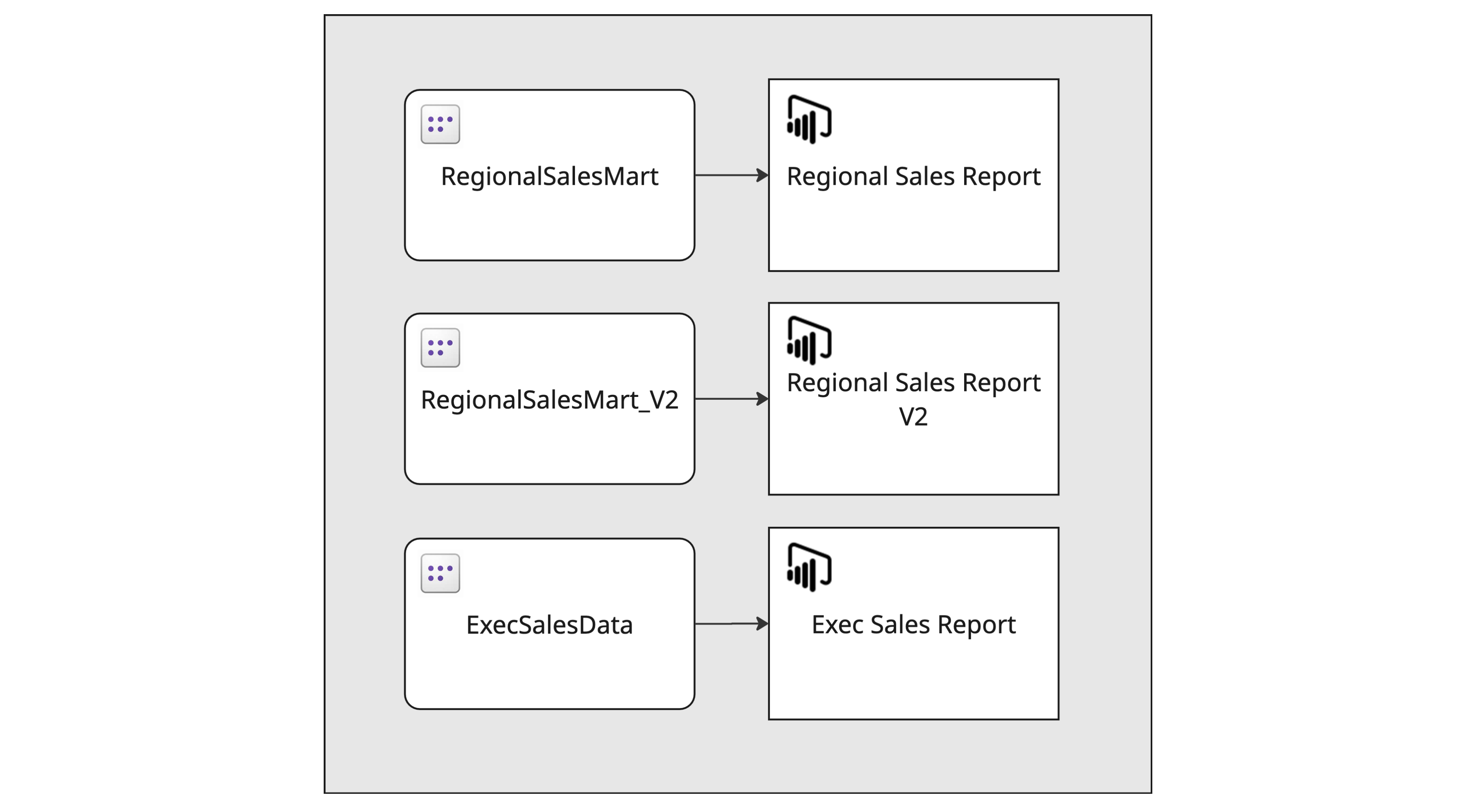

Here’s a typical scenario. Developer A creates a regional sales report, while Developer B works on an executive-level report. Both reports are well-received, but as requests for new KPIs and updates start coming in, things begin to unravel.

Developer A notices that some sales data includes irrelevant transactions, such as stock being written off in stores. Eager to fix this, Developer A updates the regional sales report. Meanwhile, Developer B is adding new KPIs to the executive report. Although both developers are working independently, their changes end up causing problems.

Developer A creates a new version of the report with a new semantic model, while Developer B adjusts their existing model. After both reports are published, the Executive team notices that the sales numbers don’t match the Regional Manager’s report. The Executive team is unhappy, and the Regional Manager asks Developer A to create an executive-level version of the regional report. But when that new report arrives, it lacks the KPIs the Executive team had been using. The Executive team starts disengaging, preferring manual calculations over trusting the report.

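What went wrong? The mismatch occurred because the two developers were applying different logic in different semantic models: Developer A’s new model filtered out transactions such as stock write-offs, while Developer B’s existing model did not carry that change. Without a single, centralised source of truth, inconsistencies arose, leading to confusion and frustration.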

How Data Engineers Can Restore Trust in Reporting

This is where Data Engineers play a crucial role. Their primary responsibility is to build and manage the foundational models that BI Developers rely on for consistent, reliable data. In this case, the problem is clear: there are too many semantic models, each applying its own logic. The Data Engineer would centralise the data into one semantic model, with predefined relationships and standardised KPIs, so that every report draws on the same definitions.

Rather than having separate models for regional and executive reports, the Data Engineer creates a unified model that everyone can use. This model processes all data, including updates like stock write-offs, in a consistent manner. By centralising the logic, developers no longer need to create their own models, preventing the misalignment seen earlier.
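The effect of centralising the logic can be illustrated with a minimal Python sketch. The `Transaction` fields, the `WRITE_OFF` type, and the `net_sales` function are hypothetical names chosen for illustration; in Power BI the shared definition would live as a measure in the semantic model rather than in Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    region: str
    kind: str      # hypothetical values: "SALE" or "WRITE_OFF"
    amount: float


TRANSACTIONS = [
    Transaction("North", "SALE", 1200.0),
    Transaction("North", "WRITE_OFF", 150.0),
    Transaction("South", "SALE", 800.0),
    Transaction("South", "WRITE_OFF", 50.0),
]


def net_sales(transactions, region=None):
    """Single shared KPI definition: sales only, stock write-offs excluded."""
    return sum(
        t.amount
        for t in transactions
        if t.kind == "SALE" and (region is None or t.region == region)
    )


# Before centralising: each report carried its own logic.
executive_total_old = sum(t.amount for t in TRANSACTIONS)  # includes write-offs
regional_total_old = sum(t.amount for t in TRANSACTIONS if t.kind == "SALE")

# After centralising: both reports call the same definition.
executive_total = net_sales(TRANSACTIONS)
regional_total = sum(net_sales(TRANSACTIONS, r) for r in ("North", "South"))

print(executive_total_old, regional_total_old)  # the two old figures disagree
print(executive_total, regional_total)          # the shared definition agrees
```

Because the filter lives in one place, a change such as excluding another transaction type is made once and reaches every report that uses the definition.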

Data Engineers would expand on the functionality of this semantic model within the DEV workspace, introducing new data filtering logic, KPIs, or changes to KPI logic. BI Developers can then adjust their Power BI reports in the DEV workspace to reflect any necessary updates on the front end. Once the changes are made, the updated model can be promoted to the TEST workspace for validation, where BI Developers and supporting team members ensure the changes meet expectations. After validation, the model is deployed to the PROD workspace, ensuring it’s ready for production use.
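The promotion flow can be modelled as a simple gate, sketched below in plain Python. This is an illustration of the workflow only, not the Fabric deployment pipeline API; the `promote` function and its `checks_passed` flag are hypothetical.

```python
STAGES = ("DEV", "TEST", "PROD")


def promote(current_stage, checks_passed):
    """Return the next stage, or stay put if the checks have not passed."""
    if current_stage not in STAGES:
        raise ValueError(f"unknown stage: {current_stage}")
    if current_stage == "PROD" or not checks_passed:
        return current_stage
    return STAGES[STAGES.index(current_stage) + 1]


stage = "DEV"
stage = promote(stage, checks_passed=True)    # development work is complete
after_failed_validation = promote(stage, checks_passed=False)  # issue found in TEST
after_validation = promote(stage, checks_passed=True)          # validation signed off

print(stage, after_failed_validation, after_validation)
```

The point of the gate is that nothing reaches PROD without passing through TEST, and a failed validation leaves the model where it is.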

With a structured, centralised model, the Data Engineer ensures that reports are consistent, reliable, and trustworthy, allowing BI Developers to focus on refining the front-end reports with confidence.

Here’s what it looks like in action:

What Data Engineers Would Consider

As they move forward with implementing the updated model, Data Engineers would ask themselves several key questions to make sure their development process is robust and their outputs are trustworthy.

Is my code backed up in a repository? Can I create feature branches for new development work?

Do I have an environment/workspace for Dev, Test, and Prod?

Do I have clear definitions of KPIs, data source criteria, my dimensional and fact tables, etc.?

Do I have any data validation processes in place to ensure my figures are correct? Is there an aggregated view I can validate against to confirm my figures match reporting, for example an SAP BW query?
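The last question, validating against an aggregated view, can be sketched as a small reconciliation check. This is a minimal example under stated assumptions: the `reconcile` function, the region keys, the figures, and the tolerance are all hypothetical, and the reference totals stand in for whatever aggregated source is available (such as an extract from an SAP BW query).

```python
def reconcile(model_totals, reference_totals, tolerance=0.01):
    """Compare per-key totals and return the keys whose figures differ.

    Keys missing from either side are reported as discrepancies too.
    """
    discrepancies = {}
    for key in sorted(set(model_totals) | set(reference_totals)):
        model_value = model_totals.get(key)
        reference_value = reference_totals.get(key)
        if model_value is None or reference_value is None:
            discrepancies[key] = (model_value, reference_value)
        elif abs(model_value - reference_value) > tolerance:
            discrepancies[key] = (model_value, reference_value)
    return discrepancies


# Illustrative figures only.
model = {"North": 1200.00, "South": 800.00, "East": 430.50}
reference = {"North": 1200.00, "South": 795.00, "West": 310.00}

issues = reconcile(model, reference)
for region, (model_value, reference_value) in issues.items():
    print(f"{region}: model={model_value} reference={reference_value}")
```

A result like this is the kind of output a data validation dashboard could surface, with one row per key that fails to reconcile.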

Streamlining the Reporting Process with Microsoft Fabric

Once these key aspects are addressed, the Data Engineer can implement a solid action plan using the tools available in Microsoft Fabric to streamline and optimise the reporting process.

Set up Dev, Test, and Prod workspaces.

Set up a DevOps repo with a Dev branch.

Adopt the Lakehouse Medallion approach to data ingestion, curation, and reporting layers.

Create a semantic model from the Gold (Reporting Layer) Lakehouse, making use of Direct Lake tables. This allows the model to pull in the latest data, enabling end users in Power BI to see the most current reporting data.

If there’s an additional source for an aggregated view of the data being ingested/curated, use it to cross-validate figures. These figures can be exposed to Power BI measures, which will allow data validation dashboards to be created, highlighting any discrepancies in the data.
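The Medallion layering in the plan above can be sketched in a few lines of plain Python. In Fabric these layers would normally be Delta tables in Lakehouses, populated by notebooks or pipelines; the row shapes, field names, and the `to_silver` and `to_gold` functions here are hypothetical and only illustrate the role of each layer.

```python
from collections import defaultdict

# Bronze: rows exactly as ingested, untyped and possibly dirty.
bronze = [
    {"region": " north ", "kind": "SALE", "amount": "1200.00"},
    {"region": "North", "kind": "WRITE_OFF", "amount": "150.00"},
    {"region": "south", "kind": "SALE", "amount": "800.00"},
    {"region": "South", "kind": "SALE", "amount": "n/a"},  # unparseable amount
]


def to_silver(rows):
    """Curate: normalise region names, cast amounts, skip rows that fail to parse.

    Skipping is a simplification; a real pipeline would more likely
    quarantine rejected rows for review.
    """
    silver = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        silver.append(
            {
                "region": row["region"].strip().title(),
                "kind": row["kind"],
                "amount": amount,
            }
        )
    return silver


def to_gold(rows):
    """Reporting layer: net sales per region, write-offs excluded."""
    totals = defaultdict(float)
    for row in rows:
        if row["kind"] == "SALE":
            totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Keeping the business rule (excluding write-offs) in the Gold step means the semantic model built on top of it inherits one agreed definition.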

Conclusion

As Data Engineers take on the responsibility of modelling, they can refine and enhance the process to be more controlled and iterative. BI Developers can now organise Power BI reporting suites into separate workspaces, drawing from a centralised Group Reporting Data Hub model. This makes it possible to create custom apps for different user groups – such as an Executive App, Operations App, and Field Team App – each with its own workspace, while still maintaining the ability to manage Dev, Test, and Prod environments for Power BI reports.

With Microsoft Fabric, Data Engineers can simplify this process even further. With Direct Lake on a Lakehouse source, the semantic model reads the Delta tables in the Lakehouse directly, which removes much of the need for scheduled semantic model refreshes. Deployment pipelines then move assets from Dev to Test to Prod in a controlled, repeatable way, streamlining the workflow.

Below are top-level cards highlighting the key features of a Data Engineering approach to semantic modelling, with one example for Microsoft Fabric and one for Synapse. They show how we can support clients with reporting implementation. The golden asset in both is a single source of truth for reporting.

Synapse Implementation

Fabric Implementation

Ascent’s Microsoft Fabric Accelerator

At Ascent, we have supercharged our development processes with our Microsoft Fabric Accelerator, creating support assets for ingestion, curation, and the reporting layer. Our repeatable patterns remove the complexity of ingestion and take away common coding pain points.

Our parameter-driven pipelines give our clients the ability to build ingestion and curation with no code. Our forward-thinking solution can handle non-schema Lakehouses and the soon-to-be-GA schema-based Lakehouses as sources and destinations. As we onboard new Microsoft Fabric workstreams, our Accelerator continuously evolves: we take learnings from projects and add new functionality to it.

Using our Accelerator, we and our clients are then able to build generic data solutions. An example could be an API data source with multiple table entities that need to be ingested, with potential dependencies between tables. We call the generic pipelines and wrap a solution around them for that particular API data source, which can then be applied to any client.
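To make the dependency example concrete, here is a minimal Python sketch of ordering table entities so that each is ingested after the entities it depends on. It is an illustration of the general idea only, not the Accelerator’s implementation: the entity names, the `ENTITY_DEPENDENCIES` parameter set, and the `ingest_entity` stand-in are all hypothetical. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical parameter set for one API source: each entity lists the
# entities that must be ingested before it.
ENTITY_DEPENDENCIES = {
    "customers": [],
    "products": [],
    "orders": ["customers"],
    "order_lines": ["orders", "products"],
}


def ingest_entity(name):
    """Stand-in for a call to a generic, parameter-driven ingestion pipeline."""
    return f"ingested {name}"


def run_ingestion(dependencies):
    """Ingest every entity after the entities it depends on."""
    order = list(TopologicalSorter(dependencies).static_order())
    return [(name, ingest_entity(name)) for name in order]


results = run_ingestion(ENTITY_DEPENDENCIES)
order = [name for name, _ in results]
print(order)
```

Only the dependency map changes from one API source to the next, which is what makes the wrapper reusable. A circular dependency in the map raises `graphlib.CycleError` rather than producing an invalid order.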